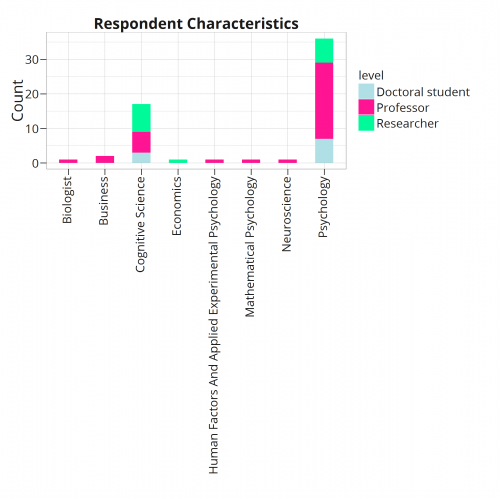

The survey "Process Models in Judgment and Decision Making" is an expert survey we conducted to learn what researchers think the term "process model" means and whether it applies to several models in the field. The main objective was to find out whether researchers'...

Inter-rater reliability

Inter-rater reliability, R-code / 24.12.2014
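The linked R script is not reproduced here, and this page does not say which reliability coefficient it computes. The sketch below is only an illustration. It computes Fleiss' kappa, a common agreement statistic for several raters sorting items into categories. It assumes that each respondent classified each model as either a process model or not. The function name `fleiss_kappa` and the example counts are hypothetical. The sketch is in Python rather than R and is not the survey's own analysis.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number n of raters.

    counts[i][j] is the number of raters who put item i into category j.
    (Hypothetical helper for illustration; not the survey's R code.)
    """
    if not counts:
        raise ValueError("need at least one item")
    n = sum(counts[0])  # raters per item
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    n_items = len(counts)
    n_cats = len(counts[0])

    # Proportion of all ratings that fall into each category.
    p = [sum(row[j] for row in counts) / (n_items * n) for j in range(n_cats)]

    # Observed agreement per item: share of rater pairs that agree on it.
    per_item = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    p_bar = sum(per_item) / n_items

    # Agreement expected by chance, from the overall category proportions.
    p_e = sum(pj * pj for pj in p)
    if p_e == 1.0:
        raise ValueError("kappa undefined: all ratings fall into one category")
    return (p_bar - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical data: 4 models, 5 raters each;
    # columns = ("process model", "not a process model").
    ratings = [[5, 0], [4, 1], [1, 4], [0, 5]]
    print(round(fleiss_kappa(ratings), 3))  # 0.6
```

A kappa of 1 means perfect agreement. A value of 0 means agreement no better than chance. Other coefficients, such as Krippendorff's alpha, may suit the survey data better, for example if some respondents skipped models.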